Selected Projects

Gen-3 Alpha Video-to-Video

I worked on a new implementation of our video-to-video product at Runway, based on the latest and greatest Gen-3 Alpha model. This included training the model and running experiments to find the form of conditioning that gives faithful video-to-video transfer and adequate consistency. I’m particularly excited about this model because of the control it offers and how it relates to computer graphics rendering.

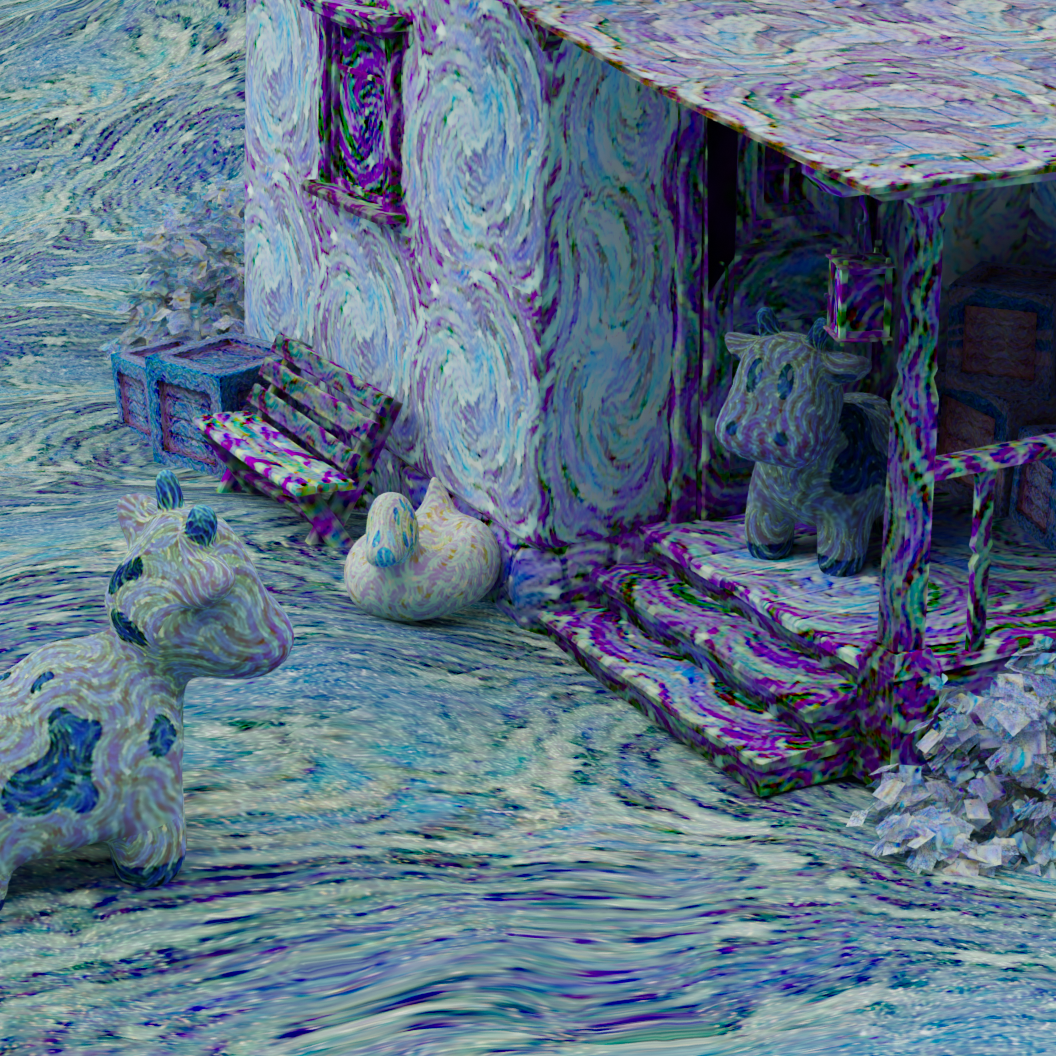

CLIP-based NNFM Stylization for 3D Assets (EG 2023)

Shailesh Mishra joined NVIDIA as a summer intern under my supervision in the Applied Deep Learning Research team, and we chose to work on 3D asset stylization. We extended “neural neighbor feature matching” (NNFM) to work with CLIP-ResNet50’s feature maps and to support multiple style images during optimization. We also enabled artistic control through a color palette loss that can guide training towards other color swatches.

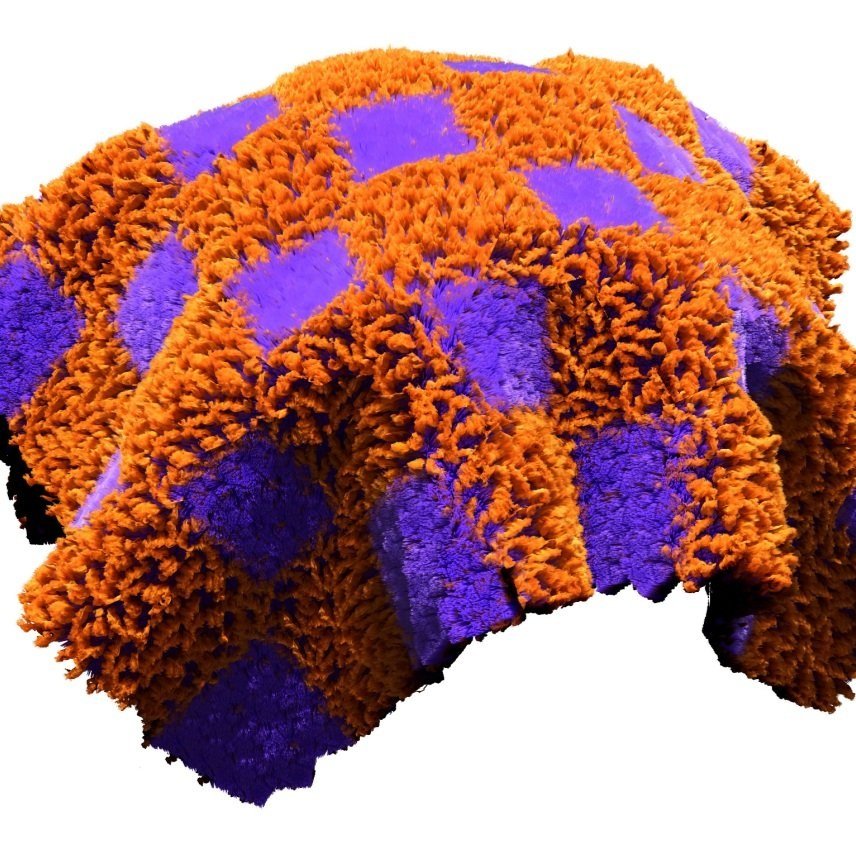

NeRF-Tex (Computer Graphics Forum 2022)

Hendrik Baatz’s project started out as his Master’s thesis, which I helped supervise, and later continued during an internship at NVIDIA Research. The paper presents a method for using neural fields to apply “mesoscale” materials, such as fur and hair, onto 3D objects. We trained the fields on patches of fur that are artistically controllable; during inference, these patches are instanced onto the surfaces of objects to give them a specific appearance. The project was first presented at EGSR 2021 and later published in CGF 2022.

Neural Scene Graph Rendering (SIGGRAPH 2021)

I started working on this project near the end of my internship at NVIDIA and continued it after starting my permanent position with the Applied Deep Learning Research team. The paper presents a new neural scene representation, inspired by traditional scene graphs, that is both controllable and scalable. It also offers some nice generalization. We focused on simpler scenes to make the problem easier at this stage, but the hope is that these neural representations will make it possible to feed whole graphics scenes into neural networks.

Compositional Neural Scene Representations for Shading Inference (SIGGRAPH 2020)

This work started out as my Master’s thesis and eventually expanded into an internship at NVIDIA in Zurich. We explored how learned neural scene representations could be used to augment traditional graphics pipelines. The main focus was on new methods for improving the interpretability of the representations. The work was supervised by Jan Novák, Fabrice Rousselle and Marios Papas.